
Hub 3 / Compal CH7465-LG (TG2492LG) and CGNV4 Latency Cause


on 02-12-2016 05:31

Good Day Ladies and Gentlemen,

Greetings from the other side of the pond, so to speak. Over the last few weeks I've been perusing various user forums across North America and Europe for issues related to Intel Puma 6 modem latency. Of those forums, yours stands out: the Hub 3 is yet another Puma 6-based modem whose users see continuous latency no matter what site is used or what online game is played. Given all the problems being reported, the following information should be of interest to all Hub 3, Compal CH7465-LG and Hitron CGNV4 modem users. There is much more to post on this, so consider this a start. The aim is to alert VM users to the real cause of the latency and, hopefully, to get the VM engineering staff (via the forum staff) engaged with Arris. I am surprised that there has been no mention on this board of users at other ISPs who are suffering the exact same issues with their modems, so this may come as a surprise to some, and possibly old news to others.

So, the short story:

The Hub 3 / Compal CH7465-LG (TG2492LG) and Hitron CGNV4 modems are based on Intel's Puma 6 / Puma 6 Media Gateway (6MG) chipsets. These modems exhibit high latency both to the modem and through the modem. The latency affects all IPv4 and IPv6 protocols, so it will be seen in every internet application and game. The basic cause is that data packets are processed by a CPU software process instead of by the hardware processor / accelerator. It appears that a higher-priority task runs periodically, causing packet processing to halt and then resume. This is observed as latency in applications and in ping tests to the modem and beyond.

For the last several weeks, Hitron, along with Intel and Rogers Communications in Canada, has been addressing the latency issue in the Hitron CGNxxx series modems. To date, only the IPv4 ICMP latency has been resolved. Although this is only one protocol, it does show that a Puma 6MG modem is capable of using the hardware processor / accelerator with good results. Rogers is currently waiting for further firmware updates from Hitron, which should include an expanded list of resolved protocol latency issues.

For Arris modems, "Netdog", an Arris engineer, indicated last week that Arris was on board to address the issue for the Arris SB6190 modem. That should be good news for any Arris modem user (read: Hub 3 user), as Arris should be able to port those changes over to other Puma 6/6MG modems fairly quickly. This is not a trivial exercise and will probably take several weeks to accomplish. Note that there is no guarantee at this point that all packet processing can be shifted to the hardware processor / accelerator without side effects such as packet loss. Time will tell whether all of the technical issues can be resolved with the hardware in the current Puma 6/6MG chipset.

Last night, Netdog loaded beta firmware on selected test modems on the Comcast network. As this was only done last night, it's too soon to tell what this version resolves and whether it was successful. Netdog has contacts at Comcast, Rogers, Charter and Cox Communications to fan out beta versions and modifications for testing. I'd say it's time to add Virgin Media and/or Liberty Global to that group as well.

Recent activity:

Approximately three weeks ago, DSLReports user xymox1 started a thread in which he reported high latency to an Arris SB6190 and illustrated it with numerous MultiPing plots. This is the same latency that I and other Rogers Communications users have been dealing with for months, so it came as no surprise. As well as reporting via that thread, xymox1 took it upon himself to email several staff members at Arris, Intel, CableLabs and others. The result of that campaign was Netdog's announcement last week that Arris was fully engaged in resolving the issue. That has led to last night's release of beta firmware, although, as I indicated, it's too early to determine what the beta firmware resolves, if anything.

The original thread that xymox1 started is here:

Yesterday, DSLReports issued a news story covering the thread:

Today, Arris responded:

That response was also picked up by Multichannel.com:

http://www.multichannel.com/news/distribution/intel-arris-working-firmware-fix-sb6190-modem/409379

More news is likely to appear in the next few days as additional tech and news staff pick up on this issue.

Hub 3 observations:

Like many others using a Puma 6/6MG modem, Hub 3 users experience latency when they ping the modem or a target outside the home, game online, or use low-latency applications. The common misconception is that this is bufferbloat. It's not. It's most likely a case of packet processing stopping while the CPU handles a higher-priority task. Packet processing is done by the CPU regardless of whether the modem is in modem mode or router mode, and regardless of which IPv4 or IPv6 protocol is used. Normally, the latency is just that: latency. The exception is UDP packets, which see both latency and packet loss. The result is delayed and failed DNS lookups, and poor performance in games that use UDP for player/server or player/player communications.

Can this be fixed?

So far, it appears that the answer is yes. Rogers Communications issued beta firmware to a small group of test modems in October. This version shifted IPv4 ICMP processing from the CPU to the hardware processor / accelerator, greatly improving ping latency. We are currently waiting for the next firmware version, which should shift other protocols over to the hardware processor / accelerator. That can be seen in the following post:

The details and results of last night's beta release to the Comcast group have yet to be seen.

At this point there is enough reading to keep most staff and users busy. My intention is to post some of the history leading up to this point, along with instructions on how to detect the latency and packet loss without using a BQM. I had hoped to post this all at once, but events are moving much faster than I had thought they would. For now, this should suffice to get the ball rolling.

Below is a link to a post with a couple of HrPing plots from my 32-channel modem to the connected CMTS. These show the latency that is observed and reflect what others have posted in this forum using PingPlotter and HrPing.

https://www.dslreports.com/forum/r31106550-

HrPing is one of the free applications that can be used to monitor the latency to and through the modem.

PingPlotter plots showing the latency from my modem to the CMTS can be found in the first two or three rows of my online image library at Rogers Communications, linked below. They are essentially what the BQM would look like if you could zoom into the plot far enough to see the individual ping spikes. Those ping spikes are common to Puma 6 and Puma 6MG modems.

http://communityforums.rogers.com/t5/media/gallerypage/user-id/829158
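If you'd rather pick the spikes out of your own ping log than eyeball a zoomed plot, here is a minimal Python sketch. It assumes you have exported your results as (time in seconds, RTT in ms) pairs; the function names and the "3x the median RTT" spike threshold are illustrative choices of mine, not features of HrPing or PingPlotter. The example data is synthetic, not a measurement.

```python
from statistics import median


def find_spikes(samples, factor=3.0):
    """Return timestamps of samples whose RTT exceeds factor x the median RTT.

    samples: list of (time_s, rtt_ms) tuples, e.g. parsed from a ping log.
    """
    baseline = median(rtt for _, rtt in samples)
    return [t for t, rtt in samples if rtt > factor * baseline]


def spike_intervals(spike_times, ndigits=3):
    """Return the gaps (in seconds) between consecutive spikes."""
    return [round(b - a, ndigits) for a, b in zip(spike_times, spike_times[1:])]


# Synthetic example: 10 ms pings every 100 ms, with a 40 ms spike every 1.9 s.
samples = [(i * 0.1, 40.0 if i % 19 == 0 else 10.0) for i in range(60)]
print(spike_intervals(find_spikes(samples)))  # [1.9, 1.9, 1.9]
```

A regular spacing between spikes is what you'd expect if a periodic task is behind them; irregular spacing would point more towards congestion.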

[MOD EDIT: Subject heading changed to assist community]


14-12-2017 14:39 - edited 14-12-2017 14:51

@m01, the iperf test will indicate the maximum UDP transfer rates and their losses, but it won't show the typical latency that you will experience within your ISP network. Here are some points to keep in mind:

1. iperf shows higher latency, probably due to the distance to the test servers, so the results may resemble what you would see with a gaming site, as it's a similar circumstance: UDP transfer over longer distances.

2. It's also not officially supported on Windows.

3. You have to increase the receive window in the test settings in order to see the maximum UDP transfer rate that your PC will run.

4. You have to run iperf 3.1.7 or later, as earlier versions will erroneously indicate high UDP losses. FWIW, the latest iperf info is found here:

https://github.com/esnet/iperf

The release notes indicate that the current iperf build for non-Windows platforms is version 3.4.

Version 3.3 for Windows can be downloaded here:

iperf downloads can be found here, along with the test site IP addresses, port numbers and command line instructions:

https://iperf.fr/

Note that this site only has iperf 3.1.6 for Windows, which shows higher than actual transfer losses. Use the site above for iperf 3.3 for Windows.

Here are the commands for the receive and transmit tests, in case you want to give it a go:

Receive test

iperf3 -c iperf.he.net -p 5201 -u -l 1k -b 300m -w 510M -t 31 -O 1 -i 1 -f m -R -V --get-server-output

This runs a 31-second test, omitting the first second's results. It sends 1 KB UDP datagrams at 300 Mb/s with a receive window (socket buffer) size of 510 MB, and prints the results every second. In my opinion, the goal should be to find the maximum rate at which the test runs with no datagram losses on the receive side. To do that, run the test as shown above and look at the results, which show the local receiver stats, the summary results and the server output stats. As you adjust the test bandwidth ("-b 300m", i.e. 300 Mb/s), you will see the losses in the receiver stats and the summary results. Adjust "-b 300m" up or down, keeping an eye on the losses in the receiver stats and summary results, to find a consistent point that gives the highest transfer rate possible given the modem and your PC's capability.

One quirk you will see is a split between the receiver and server stats. When the test runs with no losses and the data rate is low enough, the receiver and server transmit rates match up. As you push up the bandwidth, either on your PC for the transmit test or on the server for the receive test, the server and receiver bandwidth numbers will diverge from one another, while the losses stay at 0. I don't know whether that's an issue with the Windows build only or with the original source code. With no losses and matching transmit and receive bandwidths, those numbers should give you an accurate indication of the maximum loss-free UDP rates your modem and PC will run.

FWIW, on my Puma 7 modem I can run 600 Mb/s down and 50 Mb/s up without any losses. That's on a 1 Gb/s down, 50 Mb/s up internet service. It's possible that the download rate might run higher if I were overclocking my PC.
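The "adjust -b up or down until the losses stop" search can be automated. Below is a rough Python sketch under stated assumptions: it shells out to iperf3 with the same flags as the receive test plus `-J` (JSON output), and bisects the rate. The function names are hypothetical, and the JSON path `end.sum.lost_percent` is an assumption that may differ between iperf3 versions and between normal and reverse (`-R`) mode, so print the JSON from one run and check before relying on it. The search also assumes losses only grow as the rate rises, which a real connection doesn't strictly guarantee, so re-run the final rate a few times.

```python
import json
import subprocess


def iperf3_udp_loss(host, port, rate_mbps, reverse=True, seconds=31):
    """Run one iperf3 UDP test and return the reported loss percentage.

    Assumption: the JSON report (-J) carries the loss figure at
    end.sum.lost_percent; verify this against your iperf3 build.
    """
    cmd = ["iperf3", "-c", host, "-p", str(port), "-u", "-l", "1k",
           "-b", f"{round(rate_mbps)}m", "-w", "510M",
           "-t", str(seconds), "-O", "1", "-J"]
    if reverse:
        cmd.append("-R")  # server sends, we receive (the receive test)
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(result.stdout)["end"]["sum"]["lost_percent"]


def max_lossless_rate(loss_at, low_mbps, high_mbps, resolution_mbps=5.0):
    """Bisect for the highest rate (Mb/s) at which loss_at(rate) reports no loss.

    Assumes loss is zero at low_mbps and only grows as the rate increases.
    Returns None if even low_mbps shows losses.
    """
    if loss_at(low_mbps) > 0:
        return None
    while high_mbps - low_mbps > resolution_mbps:
        mid = (low_mbps + high_mbps) / 2
        if loss_at(mid) > 0:
            high_mbps = mid  # losses: the ceiling is below mid
        else:
            low_mbps = mid   # clean run: the ceiling is at or above mid
    return low_mbps
```

Usage would be something like `max_lossless_rate(lambda r: iperf3_udp_loss("iperf.he.net", 5201, r), 100, 1000)`. Bisection keeps the number of 31-second runs to roughly eight for a 100 to 1000 Mb/s range, instead of stepping through it by hand.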

Here's the transmit test:

iperf3 -c iperf.he.net -p 5201 -u -l 1k -b 50m -w 510M -t 31 -O 1 -i 1 -f m -V --get-server-output

Once again, this is a 31-second test, omitting the first second's results. It sends 1 KB datagrams at 50 Mb/s. To change the transmit rate, change "-b 50m" to your internet plan's upload rate and start there. You might be able to slightly exceed the stated upload rate of your plan.

The transfer size is 1 KB in both cases, matching the maximum transfer size that I had seen in a data capture for League of Legends. That data size varies from around 50 bytes up to 1 KB. If one were to change the transfer size, the maximum data rate seen before any losses are observed should change, but I've never taken the time to try it.
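A quick bit of arithmetic shows why the transfer size might matter: at a fixed bitrate, smaller datagrams mean more packets per second, and on a Puma 6 each packet goes through CPU-based processing. Here is a small Python sketch; the function name is hypothetical, and it assumes iperf3's "m" suffix means 10^6 bit/s and "-l 1k" means 1024 bytes, which is consistent with the roughly 12,207 datagrams per second in the receive-test server output below.

```python
def datagrams_per_second(rate_mbps, datagram_bytes):
    """Datagrams per second needed to carry rate_mbps (10**6 bit/s) of UDP payload."""
    return rate_mbps * 1_000_000 / (datagram_bytes * 8)


# 1 KB (1024-byte) datagrams, as with "-l 1k":
print(round(datagrams_per_second(100, 1024)))  # 12207
print(round(datagrams_per_second(50, 1024)))   # 6104
# The same 50 Mb/s carried in 50-byte datagrams needs about 20x the packet rate:
print(round(datagrams_per_second(50, 50)))     # 125000
```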

For UK testing, use the server list at https://iperf.fr/ to find the closest test server and port number, and change the "iperf.he.net -p 5201" setting to match the selected server.

Here is an example of the receive test, running on my old motherboard:

C:\Temp\iperf>iperf3 -c iperf.he.net -p 5201 -u -l 1k -b 100m -w 510M -t 31 -O 1 -i 1 -f m -R -V --get-server-output

iperf 3.3

CYGWIN_NT-10.0 WebPC 2.9.0(0.318/5/3) 2017-09-12 10:18 x86_64

warning: Ignoring nonsense TCP MSS -2146494208

Control connection MSS 0

Time: Thu, 14 Dec 2017 13:19:48 GMT

Connecting to host iperf.he.net, port 5201

Reverse mode, remote host iperf.he.net is sending

Cookie: deleted

[ 5] local 10.0.0.8 port 56451 connected to 216.218.227.10 port 5201

Starting Test: protocol: UDP, 1 streams, 1024 byte blocks, omitting 1 seconds, 31 second test, tos 0

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-1.01 sec 10.8 MBytes 90.1 Mbits/sec 5.934 ms 0/11063 (0%) (omitted)

[ 5] 0.00-1.00 sec 11.3 MBytes 94.5 Mbits/sec 0.067 ms 0/11537 (0%)

[ 5] 1.00-2.00 sec 11.9 MBytes 99.9 Mbits/sec 0.067 ms 0/12192 (0%)

[ 5] 2.00-3.00 sec 12.2 MBytes 102 Mbits/sec 0.045 ms 0/12468 (0%)

[ 5] 3.00-4.00 sec 11.7 MBytes 98.0 Mbits/sec 0.067 ms 0/11964 (0%)

[ 5] 4.00-5.00 sec 11.8 MBytes 99.2 Mbits/sec 0.067 ms 0/12114 (0%)

[ 5] 5.00-6.00 sec 12.1 MBytes 101 Mbits/sec 0.045 ms 0/12388 (0%)

[ 5] 6.00-7.00 sec 11.4 MBytes 95.4 Mbits/sec 3.780 ms 0/11646 (0%)

[ 5] 7.00-8.00 sec 11.8 MBytes 99.3 Mbits/sec 0.079 ms 0/12128 (0%)

[ 5] 8.00-9.00 sec 11.7 MBytes 98.2 Mbits/sec 0.047 ms 0/11982 (0%)

[ 5] 9.00-10.00 sec 11.2 MBytes 93.8 Mbits/sec 0.080 ms 0/11455 (0%)

[ 5] 10.00-11.00 sec 12.1 MBytes 101 Mbits/sec 0.083 ms 0/12342 (0%)

[ 5] 11.00-12.00 sec 11.7 MBytes 97.8 Mbits/sec 0.815 ms 0/11934 (0%)

[ 5] 12.00-13.00 sec 11.9 MBytes 99.7 Mbits/sec 0.045 ms 0/12168 (0%)

[ 5] 13.00-14.00 sec 11.7 MBytes 97.9 Mbits/sec 0.067 ms 0/11953 (0%)

[ 5] 14.00-15.00 sec 12.1 MBytes 101 Mbits/sec 0.074 ms 0/12344 (0%)

[ 5] 15.00-16.00 sec 11.2 MBytes 94.2 Mbits/sec 0.078 ms 0/11499 (0%)

[ 5] 16.00-17.00 sec 11.4 MBytes 95.8 Mbits/sec 0.076 ms 0/11693 (0%)

[ 5] 17.00-18.00 sec 11.3 MBytes 95.2 Mbits/sec 0.046 ms 0/11620 (0%)

[ 5] 18.00-19.00 sec 12.6 MBytes 106 Mbits/sec 2.746 ms 0/12932 (0%)

[ 5] 19.00-20.00 sec 11.6 MBytes 97.1 Mbits/sec 0.074 ms 0/11854 (0%)

[ 5] 20.00-21.00 sec 12.1 MBytes 101 Mbits/sec 0.046 ms 0/12348 (0%)

[ 5] 21.00-22.00 sec 12.1 MBytes 101 Mbits/sec 0.077 ms 0/12387 (0%)

[ 5] 22.00-23.00 sec 12.1 MBytes 102 Mbits/sec 0.045 ms 0/12430 (0%)

[ 5] 23.00-24.00 sec 11.8 MBytes 99.0 Mbits/sec 0.064 ms 0/12091 (0%)

[ 5] 24.00-25.00 sec 11.3 MBytes 95.1 Mbits/sec 0.077 ms 0/11611 (0%)

[ 5] 25.00-26.00 sec 12.2 MBytes 102 Mbits/sec 0.069 ms 0/12447 (0%)

[ 5] 26.00-27.00 sec 12.8 MBytes 107 Mbits/sec 0.070 ms 0/13095 (0%)

[ 5] 27.00-28.00 sec 12.6 MBytes 106 Mbits/sec 0.047 ms 0/12937 (0%)

[ 5] 28.00-29.00 sec 13.6 MBytes 114 Mbits/sec 0.070 ms 0/13937 (0%)

[ 5] 29.00-30.00 sec 11.8 MBytes 98.7 Mbits/sec 0.067 ms 0/12051 (0%)

[ 5] 30.00-30.99 sec 12.0 MBytes 101 Mbits/sec 0.067 ms 0/12269 (0%)

- - - - - - - - - - - - - - - - - - - - - - - - -

Test Complete. Summary Results:

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-30.99 sec 371 MBytes 100 Mbits/sec 0.000 ms 0/379481 (0%) sender

[ 5] 0.00-30.99 sec 371 MBytes 100 Mbits/sec 0.005 ms 0/379481 (0%) receiver

CPU Utilization: local/receiver 96.9% (17.5%u/79.5%s), remote/sender 5.1% (0.5%u/4.6%s)

Server output:

-----------------------------------------------------------

Accepted connection from xx.xxx.xxx.xxx, port 54882

[ 5] local 216.218.227.10 port 5201 connected to xx.xxx.xxx.xxx port 56451

[ ID] Interval Transfer Bandwidth Total Datagrams

[ 5] 0.00-1.00 sec 10.8 MBytes 90.6 Mbits/sec 11062 (omitted)

[ 5] 0.00-1.00 sec 10.8 MBytes 90.6 Mbits/sec 11060

[ 5] 1.00-2.00 sec 11.9 MBytes 100 Mbits/sec 12208

[ 5] 2.00-3.00 sec 11.9 MBytes 100 Mbits/sec 12208

[ 5] 3.00-4.00 sec 11.9 MBytes 100 Mbits/sec 12207

[ 5] 4.00-5.00 sec 11.9 MBytes 100 Mbits/sec 12205

[ 5] 5.00-6.00 sec 11.9 MBytes 100 Mbits/sec 12208

[ 5] 6.00-7.00 sec 11.9 MBytes 100 Mbits/sec 12206

[ 5] 7.00-8.00 sec 11.9 MBytes 100 Mbits/sec 12207

[ 5] 8.00-9.00 sec 11.9 MBytes 100 Mbits/sec 12208

[ 5] 9.00-10.00 sec 11.9 MBytes 100 Mbits/sec 12207

[ 5] 10.00-11.00 sec 11.9 MBytes 100 Mbits/sec 12207

[ 5] 11.00-12.00 sec 11.9 MBytes 100 Mbits/sec 12209

[ 5] 12.00-13.00 sec 11.9 MBytes 100 Mbits/sec 12215

[ 5] 13.00-14.00 sec 11.9 MBytes 100 Mbits/sec 12201

[ 5] 14.00-15.00 sec 11.9 MBytes 100 Mbits/sec 12206

[ 5] 15.00-16.00 sec 11.9 MBytes 100 Mbits/sec 12206

[ 5] 16.00-17.00 sec 11.9 MBytes 100 Mbits/sec 12208

[ 5] 17.00-18.00 sec 11.9 MBytes 100 Mbits/sec 12206

[ 5] 18.00-19.00 sec 11.9 MBytes 100 Mbits/sec 12207

[ 5] 19.00-20.00 sec 11.9 MBytes 100 Mbits/sec 12208

[ 5] 20.00-21.00 sec 11.9 MBytes 100 Mbits/sec 12207

[ 5] 21.00-22.00 sec 11.9 MBytes 100 Mbits/sec 12207

[ 5] 22.00-23.00 sec 11.9 MBytes 100 Mbits/sec 12207

[ 5] 23.00-24.00 sec 11.9 MBytes 100 Mbits/sec 12204

[ 5] 24.00-25.00 sec 11.9 MBytes 100 Mbits/sec 12207

[ 5] 25.00-26.00 sec 11.9 MBytes 100 Mbits/sec 12207

[ 5] 26.00-27.00 sec 11.9 MBytes 100 Mbits/sec 12207

[ 5] 27.00-28.00 sec 11.9 MBytes 100 Mbits/sec 12208

[ 5] 28.00-29.00 sec 11.9 MBytes 100 Mbits/sec 12206

[ 5] 29.00-30.00 sec 11.9 MBytes 100 Mbits/sec 12207

[ 5] 30.00-31.00 sec 11.9 MBytes 100 Mbits/sec 12208

So, no losses, but the jitter numbers are rather interesting. Running a UDP test to my ISP's DNS shows a much lower return time and, as a result, a much lower jitter if I were to calculate it. I can only surmise at this point that the jitter is the result of the distance to the test server.
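For reference, iperf3's jitter figure is, to my understanding, based on the RFC 3550 interarrival jitter estimator, which tracks the variation in one-way transit time between consecutive datagrams rather than the delay itself. A minimal Python sketch of that estimator follows (the function name and sample timings are illustrative, not taken from iperf3's code). It shows that a constant path delay contributes nothing, so if distance is the cause, it would be through greater delay variation over a longer path.

```python
def rfc3550_jitter(send_times_ms, recv_times_ms):
    """Interarrival jitter as defined in RFC 3550, section 6.4.1.

    For each consecutive pair of packets, D is the change in one-way transit
    time; the running estimate moves 1/16 of the way toward |D| per packet.
    """
    jitter = 0.0
    prev_transit = None
    for sent, received in zip(send_times_ms, recv_times_ms):
        transit = received - sent
        if prev_transit is not None:
            d = abs(transit - prev_transit)
            jitter += (d - jitter) / 16.0
        prev_transit = transit
    return jitter


sent = [0.0, 10.0, 20.0, 30.0]
# A constant 80 ms path delay produces zero jitter...
print(rfc3550_jitter(sent, [t + 80.0 for t in sent]))      # 0.0
# ...while a single 16 ms step in transit time does not.
print(rfc3550_jitter(sent, [80.0, 90.0, 116.0, 126.0]))    # 0.9375
```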

Here are the upload test results:

C:\Temp\iperf>iperf3 -c iperf.he.net -p 5201 -u -l 1k -b 50m -w 510M -t 31 -O 1 -i 1 -f m -V --get-server-output

iperf 3.3

CYGWIN_NT-10.0 WebPC 2.9.0(0.318/5/3) 2017-09-12 10:18 x86_64

warning: Ignoring nonsense TCP MSS -2146494208

Control connection MSS 0

Time: Thu, 14 Dec 2017 13:38:53 GMT

Connecting to host iperf.he.net, port 5201

Cookie: deleted

[ 5] local 10.0.0.8 port 54865 connected to 216.218.227.10 port 5201

Starting Test: protocol: UDP, 1 streams, 1024 byte blocks, omitting 1 seconds, 31 second test, tos 0

[ ID] Interval Transfer Bitrate Total Datagrams

[ 5] 0.00-1.01 sec 5.93 MBytes 49.3 Mbits/sec 6077 (omitted)

[ 5] 0.00-1.01 sec 6.04 MBytes 50.1 Mbits/sec 6183

[ 5] 1.01-2.00 sec 5.96 MBytes 50.5 Mbits/sec 6098

[ 5] 2.00-3.00 sec 5.88 MBytes 49.3 Mbits/sec 6020

[ 5] 3.00-4.01 sec 6.01 MBytes 50.1 Mbits/sec 6150

[ 5] 4.01-5.00 sec 6.00 MBytes 50.7 Mbits/sec 6148

[ 5] 5.00-6.00 sec 5.96 MBytes 50.0 Mbits/sec 6104

[ 5] 6.00-7.00 sec 5.97 MBytes 50.0 Mbits/sec 6109

[ 5] 7.00-8.00 sec 5.95 MBytes 49.9 Mbits/sec 6096

[ 5] 8.00-9.00 sec 5.97 MBytes 50.1 Mbits/sec 6111

[ 5] 9.00-10.00 sec 5.96 MBytes 50.0 Mbits/sec 6102

[ 5] 10.00-11.00 sec 5.96 MBytes 50.0 Mbits/sec 6105

[ 5] 11.00-12.00 sec 5.96 MBytes 49.9 Mbits/sec 6101

[ 5] 12.00-13.00 sec 5.96 MBytes 50.0 Mbits/sec 6105

[ 5] 13.00-14.00 sec 5.96 MBytes 50.0 Mbits/sec 6104

[ 5] 14.00-15.01 sec 5.95 MBytes 49.5 Mbits/sec 6093

[ 5] 15.01-16.01 sec 5.97 MBytes 50.0 Mbits/sec 6113

[ 5] 16.01-17.00 sec 5.89 MBytes 50.0 Mbits/sec 6034

[ 5] 17.00-18.00 sec 6.03 MBytes 50.6 Mbits/sec 6171

[ 5] 18.00-19.00 sec 5.96 MBytes 50.0 Mbits/sec 6103

[ 5] 19.00-20.00 sec 5.96 MBytes 50.0 Mbits/sec 6101

[ 5] 20.00-21.00 sec 5.96 MBytes 50.0 Mbits/sec 6108

[ 5] 21.00-22.00 sec 5.96 MBytes 50.0 Mbits/sec 6104

[ 5] 22.00-23.00 sec 5.96 MBytes 50.0 Mbits/sec 6101

[ 5] 23.00-24.00 sec 5.96 MBytes 50.0 Mbits/sec 6107

[ 5] 24.00-25.00 sec 5.96 MBytes 50.0 Mbits/sec 6100

[ 5] 25.00-26.00 sec 5.96 MBytes 50.0 Mbits/sec 6105

[ 5] 26.00-27.00 sec 5.96 MBytes 50.0 Mbits/sec 6106

[ 5] 27.00-28.00 sec 5.94 MBytes 49.8 Mbits/sec 6081

[ 5] 28.00-29.00 sec 5.95 MBytes 50.0 Mbits/sec 6093

[ 5] 29.00-30.00 sec 5.99 MBytes 50.2 Mbits/sec 6129

[ 5] 30.00-31.00 sec 5.91 MBytes 49.4 Mbits/sec 6047

- - - - - - - - - - - - - - - - - - - - - - - - -

Test Complete. Summary Results:

[ ID] Interval Transfer Bitrate Jitter Lost/Total Datagrams

[ 5] 0.00-31.00 sec 185 MBytes 50.0 Mbits/sec 0.000 ms 0/189232 (0%) sender

[ 5] 0.00-31.00 sec 185 MBytes 50.2 Mbits/sec 0.141 ms 0/189185 (0%) receiver

CPU Utilization: local/sender 37.8% (5.7%u/32.0%s), remote/receiver 1.7% (0.2%u/1.5%s)

Server output:

Accepted connection from xx.xxx.xxx.xxx, port 55916

[ 5] local 216.218.227.10 port 5201 connected to xx.xxx.xxx.xxx port 54865

[ ID] Interval Transfer Bandwidth Jitter Lost/Total Datagrams

[ 5] 0.00-1.00 sec 5.21 MBytes 43.7 Mbits/sec 0.567 ms 0/5335 (0%) (omitted)

[ 5] 0.00-1.00 sec 5.95 MBytes 49.9 Mbits/sec 0.170 ms 0/6093 (0%)

[ 5] 1.00-2.00 sec 5.98 MBytes 50.2 Mbits/sec 0.181 ms 0/6122 (0%)

[ 5] 2.00-3.00 sec 5.96 MBytes 50.0 Mbits/sec 0.124 ms 0/6103 (0%)

[ 5] 3.00-4.00 sec 5.94 MBytes 49.8 Mbits/sec 0.137 ms 0/6081 (0%)

[ 5] 4.00-5.00 sec 6.04 MBytes 50.7 Mbits/sec 0.217 ms 0/6185 (0%)

[ 5] 5.00-6.00 sec 5.97 MBytes 50.1 Mbits/sec 0.183 ms 0/6116 (0%)

[ 5] 6.00-7.00 sec 5.95 MBytes 49.9 Mbits/sec 0.171 ms 0/6096 (0%)

[ 5] 7.00-8.00 sec 5.97 MBytes 50.0 Mbits/sec 0.186 ms 0/6109 (0%)

[ 5] 8.00-9.00 sec 5.96 MBytes 50.0 Mbits/sec 0.189 ms 0/6106 (0%)

[ 5] 9.00-10.00 sec 5.96 MBytes 50.0 Mbits/sec 0.157 ms 0/6108 (0%)

[ 5] 10.00-11.00 sec 5.97 MBytes 50.1 Mbits/sec 0.153 ms 0/6111 (0%)

[ 5] 11.00-12.00 sec 5.94 MBytes 49.9 Mbits/sec 0.197 ms 0/6086 (0%)

[ 5] 12.00-13.00 sec 5.96 MBytes 50.0 Mbits/sec 0.153 ms 0/6102 (0%)

[ 5] 13.00-14.00 sec 5.97 MBytes 50.1 Mbits/sec 0.215 ms 0/6116 (0%)

[ 5] 14.00-15.00 sec 5.90 MBytes 49.5 Mbits/sec 0.152 ms 0/6040 (0%)

[ 5] 15.00-16.00 sec 5.97 MBytes 50.1 Mbits/sec 0.143 ms 0/6114 (0%)

[ 5] 16.00-17.00 sec 5.96 MBytes 50.0 Mbits/sec 0.198 ms 0/6101 (0%)

[ 5] 17.00-18.00 sec 6.01 MBytes 50.4 Mbits/sec 0.154 ms 0/6152 (0%)

[ 5] 18.00-19.00 sec 5.96 MBytes 50.0 Mbits/sec 0.173 ms 0/6107 (0%)

[ 5] 19.00-20.00 sec 5.97 MBytes 50.1 Mbits/sec 0.215 ms 0/6115 (0%)

[ 5] 20.00-21.00 sec 5.96 MBytes 50.0 Mbits/sec 0.231 ms 0/6099 (0%)

[ 5] 21.00-22.00 sec 5.95 MBytes 49.9 Mbits/sec 0.183 ms 0/6093 (0%)

[ 5] 22.00-23.00 sec 5.96 MBytes 50.0 Mbits/sec 0.200 ms 0/6105 (0%)

[ 5] 23.00-24.00 sec 5.97 MBytes 50.0 Mbits/sec 0.160 ms 0/6109 (0%)

[ 5] 24.00-25.00 sec 5.97 MBytes 50.1 Mbits/sec 0.232 ms 0/6112 (0%)

[ 5] 25.00-26.00 sec 5.96 MBytes 50.0 Mbits/sec 0.209 ms 0/6107 (0%)

[ 5] 26.00-27.00 sec 5.94 MBytes 49.8 Mbits/sec 0.187 ms 0/6079 (0%)

[ 5] 27.00-28.00 sec 5.89 MBytes 49.4 Mbits/sec 0.125 ms 0/6029 (0%)

[ 5] 28.00-29.00 sec 5.99 MBytes 50.2 Mbits/sec 0.191 ms 0/6131 (0%)

[ 5] 29.00-30.00 sec 5.93 MBytes 49.7 Mbits/sec 0.153 ms 0/6072 (0%)

[ 5] 30.00-31.00 sec 5.98 MBytes 50.2 Mbits/sec 0.116 ms 0/6126 (0%)

iperf Done.

Notice the jitter numbers at the server end, which in this case is the receiving end. Those numbers are very high, at least in my view. Knowing that the normal UDP response time within my ISP network is in the 8 to 13 ms range, with occasional spikes to the upper end of that range at heavy traffic times, I don't pay much attention to the jitter numbers. At this point I'm interested in the losses and where they start to become problematic, that is to say, no losses up to a given data transfer rate and losses above that point. Given that the losses for my combination of Puma 7 modem and faster PC don't start until I reach 600 Mb/s down and 50 Mb/s up, combined with the low latency for two-way UDP traffic, I'm satisfied with the Puma 7 UDP performance at this point. It's always possible that with additional testing or a different test approach I might change my mind on this.

OK, having said all of that, here is another approach that I've been working on: using an application to send UDP queries to the ISP's Domain Name Server (DNS). As described in the following link, this approach queries the DNS once a second, which should still be sufficient to catch the maximum query times, similar in nature to running an ICMP ping every second. It uses an application called DNS Monitor, which can be downloaded and run in demo mode, allowing you to query the VM DNS with a site name, i.e. www.google.co.uk, www.facebook.co.uk, etc. The data is captured with Wireshark, which lets you display the statistics and plot the return times. The difference between this approach and that taken by PingPlotter, for example, is that this runs UDP traffic in both directions, just as you would see with a game. PingPlotter sends a UDP ping out and gets an ICMP reply back, unless you happen to have a co-operating server at your disposal that allows you to run a two-way UDP test. Very few people have that at their disposal, but ISP customers do have access to the ISP's DNS.

http://www.dslreports.com/forum/r31737637-
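For anyone who'd rather script this than use DNS Monitor and Wireshark, here is a rough Python sketch of the same idea: a two-way UDP exchange with the ISP resolver, timed on the PC. It builds a bare DNS A-record query by hand (RFC 1035 format) so only the standard library is needed. The function names are hypothetical, and the resolver address is an assumption you must fill in yourself (use the DNS server your hub hands out); 192.0.2.1 below is only a documentation placeholder.

```python
import random
import socket
import struct
import time


def build_query(name, query_id):
    """Build a minimal DNS query packet (RFC 1035) asking for the A record of name."""
    # Header: ID, flags (0x0100 = recursion desired), 1 question, 0 other records.
    header = struct.pack(">HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    )
    # QNAME ends with a zero-length label; QTYPE=1 (A), QCLASS=1 (IN).
    return header + qname + b"\x00" + struct.pack(">HH", 1, 1)


def time_query(server, name, timeout_s=2.0):
    """Send one UDP query to server:53; return the reply time in ms, or None on timeout."""
    query_id = random.randint(0, 0xFFFF)
    packet = build_query(name, query_id)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout_s)
        start = time.perf_counter()
        sock.sendto(packet, (server, 53))
        try:
            while True:
                reply, _ = sock.recvfrom(4096)
                if reply[:2] == packet[:2]:  # match the reply to our query ID
                    return (time.perf_counter() - start) * 1000.0
        except socket.timeout:
            return None
```

Calling `time_query("192.0.2.1", "www.google.co.uk")` once a second in a loop mimics the DNS Monitor test; a `None` result counts as a lost or very late UDP reply. Since the resolver will normally answer repeat queries from its cache, the timings mostly reflect the path to and from the resolver rather than the wider DNS.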

So, FWIW, this is step one. I'll be reposting these instructions on another DSLReports forum, along with instructions for running a scripted query that lets the test run at high speed, with multiple queries per second. I don't have that written out in a form that I can post just yet, but hopefully it won't take me long.

Hope this helps. I'd be interested in seeing a plot from a Puma 6 modem if anyone decides to give this a go.


on 14-12-2017 14:45

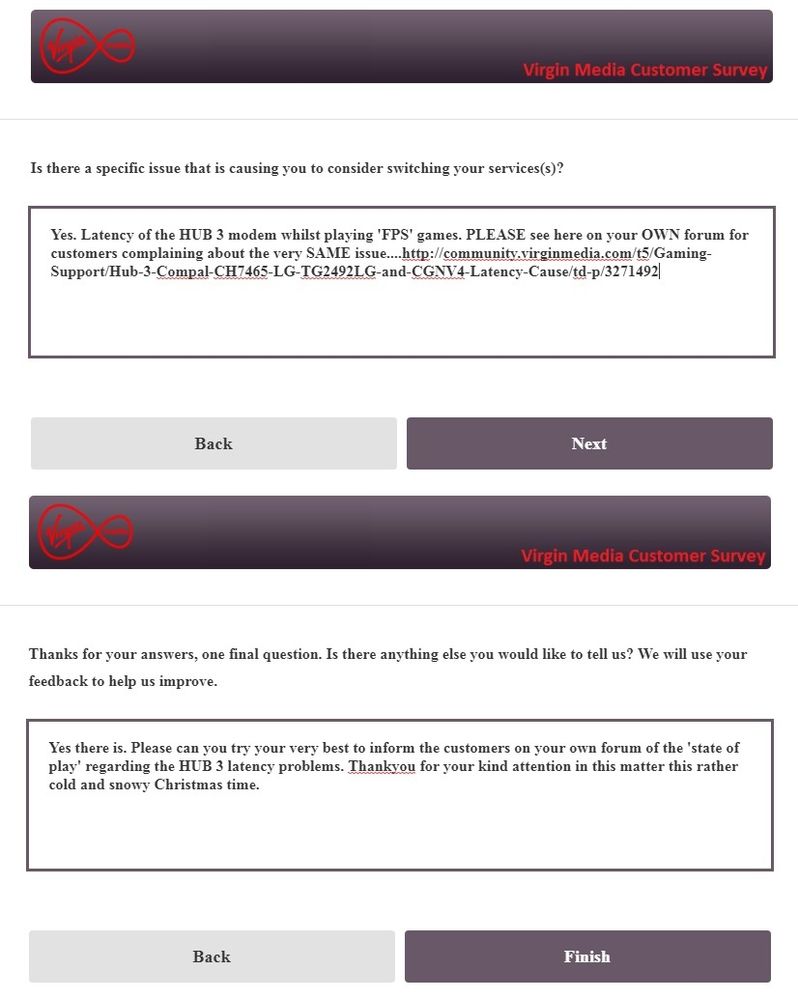

I was sent a questionnaire, which was duly completed.

I hope Virgin take on board my concerns.

I post them here for your delectation this fine frosty Christmas time.


on 14-12-2017 14:48

Thanks 'Datalink' for that info. Wish you lived next to me...


on 14-12-2017 15:15

So if I do get a Super Hub 3 forced on me, and I buy an R7000 (i.e. put the Super Hub 3 into modem mode), will that mostly solve the issue?


on 14-12-2017 15:19

Chrisnewton, probably not, as you don't have the beta firmware.


14-12-2017 15:21 - edited 14-12-2017 15:24

Nope. The problem is the packet processing by the CPU instead of the hardware accelerator/processor, combined with an unknown task that fires up every 1.9 seconds or so. That task apparently causes the modem to buffer outgoing and incoming data while it runs, and then process that data once the task is complete. That's the cause of the high latency that you see in the BQM. It's the same situation in router mode and modem mode. The beta firmware changes ICMP packet processing from its current CPU processing to hardware accelerator/processor processing.
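To illustrate why this shows up as periodic spikes rather than a steady offset, here is a toy Python model of the mechanism described above. The 1.9 s period comes from the observation in this post; the 20 ms stall length and 1 ms base latency are made-up illustrative values, not measurements, and the model ignores the extra time needed to work through the buffered backlog once the stall ends. The function name is hypothetical.

```python
def stall_model_latency(send_times_ms, period_ms=1900.0, stall_ms=20.0, base_ms=1.0):
    """Latency each packet would see if forwarding pauses periodically.

    Hypothetical model: forwarding stops for the first stall_ms of every
    period_ms cycle; a packet arriving during a stall waits until it ends.
    Backlog drain time after the stall is ignored.
    """
    latencies = []
    for t in send_times_ms:
        phase = t % period_ms  # how far into the current cycle the packet arrives
        wait = stall_ms - phase if phase < stall_ms else 0.0
        latencies.append(base_ms + wait)
    return latencies


print(stall_model_latency([0, 10, 25, 1900, 1905]))  # [21.0, 11.0, 1.0, 21.0, 16.0]
```

In a ping plot, only the probes that happen to land inside a stall show a spike, and the spike height depends on where in the stall each probe arrives, which fits the varying spike heights seen on Puma 6 modems.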


on 14-12-2017 15:31

Looks like the GitHub iperf link above doesn't work for some reason. Here's a link that should work:

https://github.com/esnet/iperf


on 14-12-2017 15:31


on 14-12-2017 15:35

No idea. A few people were selected for the beta and have stayed on it. You will probably have to wait until they push out a stable version, or release a new hub.


on 14-12-2017 16:00

Yeah, pretty much this. Even with me complaining with a lot of evidence behind me, they'd rather give me a tenner off or let me take my complaint external than just push new firmware to my Hub.

Forever waiting for the Hub 4